About

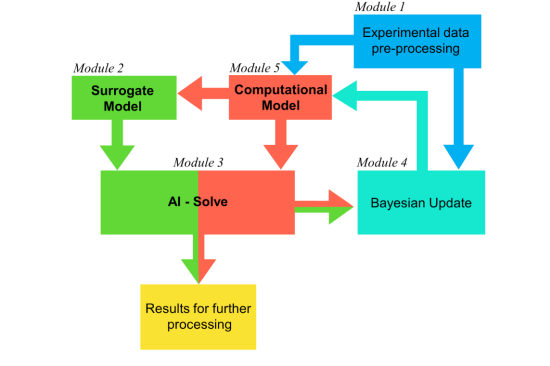

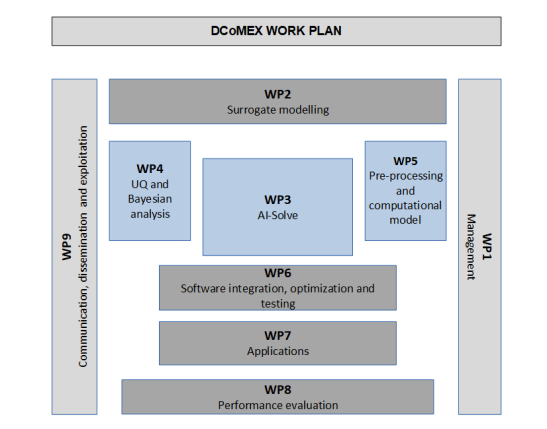

DCoMEX aims to provide unprecedented advances in the field of Computational Mechanics by developing novel numerical methods enhanced by Artificial Intelligence, along with a scalable software framework that enables exascale computing. A key innovation of our project is AI-Solve, a novel scalable library of AI-enhanced algorithms for the solution of the large-scale sparse linear systems that lie at the core of computational mechanics. Our methods fuse physics-constrained machine learning with efficient block-iterative methods and incorporate experimental data at multiple levels of fidelity to quantify model uncertainties. Efficient deployment of these methods on exascale supercomputers will provide scientists and engineers with unprecedented capabilities for predictive simulations of mechanical systems in applications ranging from bioengineering to manufacturing. DCoMEX exploits the computational power of modern exascale architectures to provide a robust and user-friendly framework that can be adopted in many applications. This framework consists of the AI-Solve library integrated into two complementary computational mechanics HPC libraries: the first is a general-purpose multiphysics engine (MSolve) and the second a Bayesian uncertainty quantification and optimisation platform (Korali). We will demonstrate the potential of DCoMEX through detailed simulations in two case studies: (i) patient-specific optimization of cancer immunotherapy treatment, and (ii) design of advanced composite materials and structures at multiple scales. We envision that the software and methods developed in this project can be further customized and can also facilitate developments in critical European industrial sectors such as medicine, infrastructure, materials, automotive and aeronautics design.

Facts

| # | Item | Value |
|---|---|---|
| 1 | Project Title | Data Driven Computational Mechanics at Exascale |
| 2 | Project Number | 956201 |
| 3 | Project Acronym | DCoMEX |
| 4 | Starting date | 01/04/2021 |
| 5 | Duration in months | 36 |
| 6 | Call Identifier | H2020-JTI-EuroHPC-2019-1 |
| 7 | Type of Action | EuroHPC-RIA |
| 8 | Topic | EuroHPC-01-2019 |
| 9 | Fixed keywords | High performance computing; Scientific computing and data processing |
| 10 | Free keywords | Data Driven Computational mechanics, Exascale Computing, Manifold Learning, Fault Tolerance, Scalability, Heterogeneous CPU+GPU computing, Bayesian analysis, Uncertainty Quantification |

Objectives

The DCoMEX project pursues the following Strategic Objectives (SO):

SO1: Construction of AI-Solve, an AI-enhanced linear algebra library

(i) SO1.1: Development of a set of innovative machine learning (ML) methods for dimensionality reduction and surrogate modelling, including diffusion maps (DMAP) manifold learning and deep neural networks (DNN).

(ii) SO1.2: Development of the AI-Solve library, fusing data-driven methods and surrogate models with efficient block-iterative sparse linear system solvers.

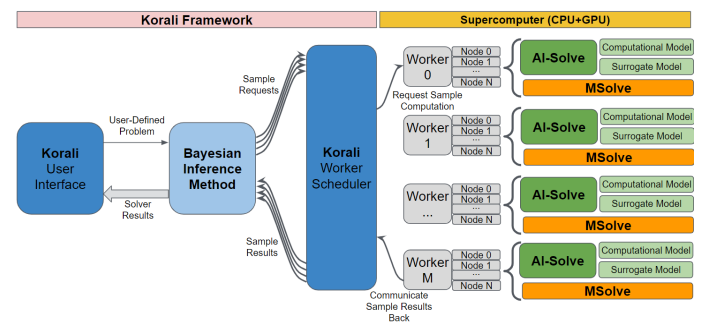

SO2: Exascale deployment of MSolve and Korali software engines.

(i) SO2.1: Optimisation of MSolve to fully utilise the combined CPU and GPU potential of modern supercomputers, and demonstration of its capabilities through performance and correctness tests on physics-based computational models.

(ii) SO2.2: Extension of the Korali UQ and Bayesian analysis framework to include state-of-the-art load-balanced sampling algorithms that efficiently harness extreme-scale computing architectures.

SO3: Pre-processing of experimental image data

(i) SO3.1: Development of 3D image and data processing routines that extract geometries together with estimates of their uncertainties that can be propagated to predictive simulators.

SO4: Integration of the DCoMEX framework, application and performance evaluation.

(i) SO4.1: Integration of the combined Korali + AI-Solve + MSolve machinery. (a) Application to the immunotherapy problem. (b) Application to the multi-scale material design.

(ii) SO4.2: Performance evaluation of DCoMEX algorithms in extreme-scale computing environments, with emphasis on scalability, energy-awareness, fault-tolerance and error-resilience.

(iii) SO4.3: Provide modular and customisable software that can be used by the broader scientific community as well as by SMEs interested in computational mechanics problems.

SO5: Scientific contributions and dissemination.

(i) SO5.1: Application of the DCoMEX framework to optimise patient specific cancer immunotherapy.

(ii) SO5.2: Application of the DCoMEX framework to the multiscale material design.

(iii) SO5.3: Dissemination of DCoMEX as a novel approach applicable to engineering problems with a need for extreme computing.

Coordinator

The National Technical University of Athens (NTUA) is the oldest and largest educational institution in Greece in the field of technology. NTUA has a firm commitment to research, thanks to its personnel and its extensive infrastructure, state-of-the-art laboratories and computing facilities. NTUA personnel have an extensive record of high-impact publications in the international literature, and a large number of doctoral students are currently pursuing PhDs in research fields related to the project. Past EU funding includes numerous H2020, FP6 and FP7 projects, five ERC IDEAS Grants and many Marie Curie networking projects.

The NTUA team consists of two research groups: the Multidisciplinary Computational Mechanics Research Group (MGroup), which belongs to the Institute of Structural Analysis and Antiseismic Research (ISAAR) of the School of Civil Engineering of NTUA, and the Computing Systems Laboratory of the School of Electrical and Computer Engineering of NTUA.

About Consortium

The project primarily seeks to develop a novel data-driven, AI-enhanced linear algebra library for solving linear systems of equations, and to integrate it into a software platform designed for exascale performance and capable of handling very demanding computational mechanics problems. In doing so, it aims to bring into common practice the analysis and design of complex and/or multiscale/multiphysics systems, which until now has been restricted by its complexity. The project is strongly interdisciplinary; consequently, the consortium is extensive, covering the basic mathematical, engineering and computer science backgrounds through to the medical and industrial applications. The consortium also reflects the breadth of the project in that it consists of a strong and well-balanced blend of HPC centers and universities. It comprises leading experts in academia and applied science, and expertise is drawn from all of these resources to ensure that the project meets its intended objectives.

The participants in the project come from Greece, Switzerland, Cyprus and Germany, so the consortium represents a cross-section of central and southern European countries. The consortium spans enough countries to ensure that the required skills are already available to the project at the correct level, rather than having to be developed as part of the project.

Regarding the underpinning science required for the success of the project, the consortium is well founded: the universities and research institutes (NTUA, ETHZ, TUM, UCY, GRNET) all have a long and solid background in simulation-based applied science and engineering, computer science and technology, and health sciences. To achieve the desired objectives, effective research must start on day one, and the academic partners within the consortium ensure that this will be the case.

- NTUA brings expertise in a number of topics addressed by the project. More specifically, the project coordinator and leader of the NTUA group has extensive experience in computational mechanics and HPC and, together with his team, will further develop the MSolve multiphysics platform and integrate it into the project. NTUA also contributes a small ICT team from the School of Electrical and Computer Engineering, bringing expertise in resource management and code performance optimisation.

- The ETHZ/CSELab partners will contribute in equal parts to developing novel statistical methods for efficient Bayesian analysis at extreme scale and to supporting HPC-related optimisations, testing and simulation at the peta- and exascale. The leader of the ETHZ group has extensive experience in HPC, UQ and Bayesian analysis and, together with his team, will further extend the Korali UQ and Bayesian analysis (BA) software and integrate it into the project. The NTUA and ETHZ/CSELab teams will work together to integrate AI-Solve with MSolve and Korali.

- TUM will contribute their 3D image processing software and algorithms, as well as clinical research data for showcasing DCoMEX’s impact in a clinical domain. The TUM partner also has extensive experience in tumour growth modelling and in the development of probabilistic models for uncertainty quantification and, as such, will contribute to the translational case studies that link biomedical research and HPC model development.

- UCY will bring their expertise in biomechanical and systems biology modelling for cancer immunotherapy prediction and optimisation. The UCY and NTUA teams will work closely together to further develop this modelling in the MSolve platform.

- The NTUA, UCY, TUM and ETHZ/CSELab teams will work together on the robust validation of the model against a large body of preclinical data collected during the UCY leader’s ERC Starting Grant. Furthermore, the NTUA, TUM and ETHZ/CSELab teams will work together on the robust validation of the material design application against experimental data collected during a national grant in which the leader of NTUA is the PI.

- Finally, the ETHZ/CSCS and GRNET HPC centers will provide computing resources (GPU+CPU test beds) and bring their expertise in resource allocation, low-level code integration and optimisation, and performance evaluation of the project codes.

Members

National Technical University of Athens

The NTUA team consists of two research groups: the Multidisciplinary Computational Mechanics Research Group (MGroup), which belongs to the Institute of Structural Analysis and Antiseismic Research (ISAAR) of the School of Civil Engineering of NTUA, and the Computing Systems Laboratory of the School of Electrical and Computer Engineering of NTUA.

The Multidisciplinary Computational Mechanics Research Group (MGroup, http://mgroup.ntua.gr/) is a numerical simulation lab specialized in large-scale computational mechanics problems. MGroup is part of the Institute of Structural Analysis and Antiseismic Research (ISAAR) of the School of Civil Engineering of NTUA. MGroup was founded by Associate Professor V. Papadopoulos and consists of three faculty members, four postdoctoral researchers and ten PhD students. The research activities of MGroup relate to material modeling, multiscale analysis and design, multiphysics, uncertainty quantification, optimum design, reduced order modeling, machine learning and artificial intelligence, accelerated computing and HPC. MGroup has developed MSolve, an open-source, general-purpose computational mechanics solver customized for solving large-scale multiscale and/or multiphysics problems (https://github.com/isaar/MSolve).

The research activities of the Computing Systems Laboratory span several areas of large-scale parallel and distributed systems, high performance computing, scientific applications, sparse computations, data management, computer architecture and operating systems. In particular, the laboratory has a strong research background and long-term experience in parallel large-scale architectures (HPC, Cloud, Grid and P2P computing), application optimization, scheduling, distributed data management and high performance computing. Its members have an academic record of more than 300 research publications in international journals and conferences.

ETH Zurich

The ETH Zurich team consists of two members: The Computational Science and Engineering Laboratory (CSElab) in the Department of Mechanical and Process Engineering and the Swiss National Supercomputing Center (CSCS).

The Computational Science and Engineering Laboratory (CSElab) in the Department of Mechanical and Process Engineering (DMAVT) was founded in 2000 by Professor Petros Koumoutsakos. The CSElab carries out research and develops computational tools for the study of complex scientific and engineering problems. Research areas include Multiscale Modeling and Simulation, Reinforcement Learning, Recurrent Neural Networks, Bayesian Uncertainty Quantification, Sampling and Optimization algorithms and High Performance Computing (HPC). Application domains include Life Sciences, Fluid Mechanics and Nanotechnology. Professor Koumoutsakos is a Foreign Member of the US National Academy of Engineering (NAE). He led the teams that won the 2013 Gordon Bell award in Supercomputing and was among the four finalists in 2015. Alumni of the CSElab are currently professors at universities such as MIT, UIUC and TU Munich. The CSElab has produced 1 monograph, 3 edited volumes, 8 book chapters, and over 350 publications in international journals and conference proceedings.

The Swiss National Supercomputing Center (CSCS) develops and operates cutting-edge high-performance computing systems as an essential service facility for Swiss researchers. These computing systems are used by scientists for a diverse range of purposes – from high-resolution simulations to the analysis of complex data. Simulation is considered the third pillar of science, alongside theory and experimentation, and scientists from an increasing number of disciplines are using high-performance computing simulation for their research. For example, supercomputers are used to model new materials with hitherto unknown properties. Climate modelling and weather forecasting would be impossible without them. In social science, simulations can help prevent mass panic by predicting people’s behavior. In medicine, computer simulations aid diagnostics and help improve treatment methods. Moreover, they can facilitate risk assessments for natural hazards such as earthquakes and the tsunamis they might trigger. CSCS has a strong track record in supporting the processing, analysis and storage of scientific data, and is investing heavily in new tools and computing systems to support data science applications. For more than a decade, CSCS has been involved in the analysis of the many petabytes of data produced by scientific instruments such as the Large Hadron Collider (LHC) at CERN. Supporting scientists in extracting knowledge from structured and unstructured data is a key priority for CSCS.

University of Cyprus

The University of Cyprus is represented by the Cancer Biophysics Laboratory (CBL).

CBL was established in 2014 with the support of an ERC Starting grant (ERC-2013-StG-336839 ReEngineeringCancer, €1.44M, 2014-2018) and it is further supported by an ERC Consolidator Grant (ERC-2019-CoG-863955, Immuno-Predictor, €2.0M, 2020-2025). The focus of the lab is the application of principles from engineering and biology in order to investigate the barriers to the effective delivery of drugs to solid tumours and develop new therapeutic strategies in order to optimize the delivery and efficacy of cancer medicines, with a focus on immunotherapy. CBL is a vibrant and internationally competitive research environment where novel computational approaches are combined with state-of-the-art experimental techniques to develop rules for the optimal design of delivery systems as well as strategies to remodel the tumour microenvironment so that drugs become more accessible to the tumour. The highlights of CBL’s research include (i) the contribution to the development of a new therapeutic strategy that targets/normalizes specific components of the tumour microenvironment, (ii) the development of specific rules for the use of nanoparticles in oncology and (iii) the development of mathematical models based on mechanics and systems biology to simulate the response of solid tumors to various cancer therapies. Apart from Dr. Stylianopoulos, current lab members include 8 senior scientists and postdoctoral fellows, 3 PhD students and 1 lab technician.

Technical University of Munich

The Technische Universität München (Technical University of Munich) is one of the leading technical universities in Europe, with a unique profile in its core domains of natural sciences, engineering, life sciences and medicine. The institutional strategy focuses on strengthening the excellence of disciplinary core competences in research, teaching and learning, but also targets the promotion of groundbreaking, interdisciplinary research. TUM inspires and proactively empowers its students, academics and alumni to think and act entrepreneurially. It initiates growth-oriented start-ups and assists its entrepreneurs in building new companies. The TUM Department of Computer Science has consistently been ranked as the top school in computer science in Germany and among the top 5 schools in Europe. TUM has a strong focus on research at the intersection of clinical and biomedical research with the engineering disciplines and the natural sciences. In the Seventh European Union Research Framework Programme and in Horizon 2020, TUM was involved in more than 300 EU research projects, coordinated approximately 40 of them and has participated in 75 ERC grants in total so far. Menze was PI in FP7 Visceral and in several national research initiatives.

Greek Research and Technology Network

GRNET – National Infrastructures for Research and Technology (GRNET S.A. http://www.grnet.gr) is a state-owned company, operating under the auspices of the Greek Ministry of Digital Governance. It is an integrated electronic infrastructure provider whose mission is to provide high-quality e-Infrastructure services to the academic, research and educational community of Greece, to link these with global e-Infrastructures and to disseminate ICT to the general public. GRNET currently operates the Greek national HPC Tier-1 center, offering a 180 TFlops computing system, 2 PB of parallel file storage, as well as application support and training services. GRNET also coordinates the Greek National Grid Initiative – HellasGrid, which federates a number of Grid sites in the country. It is one of the core participants in EGI and the related EGI-Engage project, where it operates a number of pan-European core services, and in the EGEE (Enabling Grids for E-SciencE) project series it also ran the Regional Operations Centre for South–East Europe. It has been involved in coordinating Grid activities in SEE (15 countries) for 6 years through the 3 phases of the SEE–GRID projects. GRNET has been a core partner in the EUMEDGRID, EUCHINAGRID, CHAIN and CHAIN-REDS projects, stimulating computing developments globally. Regarding policy issues in computing infrastructures, GRNET was represented as a partner in the eIRG-SP project series and EGI-DS. GRNET is the National Research and Education Network (NREN) provider for the R&E community in Greece, with over 11 Petabytes of raw disk storage and 7 Petabytes of tape archive. It is one of the core data centers of the EUDAT data infrastructure and a partner in the EUDAT2020 project, heavily involved in infrastructure operations. Moreover, GRNET coordinates and operates the GRNET CERT and the GRNET Federation for the Greek Authentication and Authorization Infrastructure.

Work Package 1

| # | Item | Value |
|---|---|---|
| 1 | Work Package number | WP1 |
| 2 | Work Package title | Management |
| 3 | Duration | M1 – M36 |
| 4 | Lead beneficiary | NTUA |

The work in this WP will be carried out in the following task:

Task 1.1: Project management, Participants: NTUA, ETHZ/CSELab, ETHZ/CSCS, UCY, TUM, GRNET, Duration: M1-M36

This task handles the administrative and financial issues of the consortium, including project coordination, supervision of reporting and support for intra-consortium communication. The project coordinator administratively supervises all documentation and reports to the Commission, with the help of the task leaders. In particular, the coordinator supervises the financial status of the project, negotiates contractual and administrative issues or possible project modifications, revises project planning when appropriate, and allocates project resources and schedules tasks if modifications prove necessary or advantageous. The primary aim is to prevent any deviation from the original work plan (e.g., by ensuring that results from the WPs are available on time and are of high quality). The consortium management ensures that each task leader is adequately supported in their responsibility for the administrative coordination and performance control of task-related operations (e.g., meetings, cost statements, summary reports, deliverables), and that adequate resources are at the disposal of the project. Regarding the scientific progress of the project, the reporting procedure (both internal and to the EC) has been established accordingly (see Deliverables). Regarding financial progress, NTUA will follow up the project expenses and track deviations in coordination with the accounting system, using the audit certificates system. Project cost management includes the processes required to ensure that the project is completed within the approved budget. Concerning cost control, NTUA will abide by the Commission requirements, including monitoring cost performance to detect deviations from the original plan, accurate recording in the cost baseline, and prevention of incorrect, inappropriate or unauthorised changes to the contract.

All the required reporting documents to the EC will be prepared within this WP by the project coordinator, who will be the contact point with the European Commission. Moreover, relevant research activities performed at European and international level in the field of exascale computing will be closely monitored throughout the project. Contacts with the organisations performing such research will be established through the Coordinator, with the aim of avoiding duplication of work, maximising project efficiency and activating synergies between projects.

The objectives of this WP are the following:

(i) To ensure effective planning, implementation, coordination and realisation of the project activities, including successful completion of the tasks and timely production of deliverables.

(ii) To manage and monitor the research, evaluation, dissemination and exploitation activities carried out in the work packages.

(iii) To support decision-making and internal and external communications, encourage greater accountability and control, minimise risk, and identify and exploit project-related opportunities.

(iv) To provide and ensure high quality standards in the project work.

(v) To protect IPR that will arise during the implementation of the project.

The Deliverables are:

D1.1: First year progress periodic report (report) (M12)

D1.2: Second year progress periodic report (report) (M24)

D1.3: Third year progress final report (report) (M36)

Work Package 2

| # | Item | Value |
|---|---|---|
| 1 | Work Package number | WP2 |
| 2 | Work Package title | Surrogate Modelling |
| 3 | Duration | M2 – M22 |
| 4 | Lead beneficiary | NTUA |

This work package focuses on implementing recent theoretical and computational advances in machine learning to develop a novel surrogate modelling strategy. A Bayesian sampling scheme will be adopted to identify the initial set of parameter values, which will produce the corresponding system solutions upon which the surrogate will be built. To handle the extreme dimensionality of the data set, the DMAP nonlinear dimensionality reduction algorithm will be applied to reduce its size, as described in module 2. To improve the accuracy of the DMAP algorithm, variable-bandwidth kernels will be implemented. Then, an innovative encoder-decoder will be constructed that uses deep NNs to map new parameter values to the low-dimensional DMAP space, and geometric harmonics (GH) or radial basis function (RBF) interpolation to map them to the high-dimensional solution space. To ensure the quality of the surrogate, an additional sampling scheme will be developed that takes into account the geometry of the solution space, using the DMAP metric to identify areas of the solution manifold that are not adequately resolved and then to provide the specific parameter values needed to remedy this.

WP2 is led by NTUA that will be responsible for the implementation of the DMAP-based surrogate and will coordinate all communication efforts between the consortium members involved. The work is divided into the following tasks:

Task 2.1: Implementation of the data-informed sampling scheme, Participants: ETHZ/CSELab, Duration: M12-M18

The Transitional Markov Chain Monte Carlo (TMCMC) algorithm will be used in this task to sample the posterior distribution of a Bayesian inference problem. A relatively low number of samples will be used in this phase. For each parameter vector sampled in the parameter space, all the relevant system responses will be stored for the construction of the surrogate model.
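As an illustration of the sampling step, the sketch below implements two ingredients of one TMCMC stage under simplifying assumptions: choosing the next tempering exponent β so that the coefficient of variation (COV) of the weights L(θ)^Δβ matches a target (1.0 is a common choice), and resampling according to those weights. The names `next_beta` and `tmcmc_stage` are hypothetical and are not Korali's API; the log-likelihood values are assumed to be precomputed, and the per-sample MCMC perturbation of full TMCMC is omitted.

```python
import numpy as np


def next_beta(log_lik, beta, target_cov=1.0, tol=1e-8):
    """Next tempering exponent, found by bisection so that the coefficient of
    variation (COV) of the weights L**(beta_new - beta) is close to target_cov."""
    def cov(d_beta):
        # Shifting by the max log-likelihood avoids overflow and leaves the COV unchanged.
        w = np.exp(d_beta * (log_lik - log_lik.max()))
        return w.std() / w.mean()

    if cov(1.0 - beta) <= target_cov:
        return 1.0  # the posterior (beta = 1) can be reached directly
    lo, hi = 0.0, 1.0 - beta  # the COV grows monotonically with d_beta
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cov(mid) > target_cov:
            hi = mid
        else:
            lo = mid
    return beta + lo


def tmcmc_stage(samples, log_lik, beta, rng, target_cov=1.0):
    """One simplified TMCMC stage: pick the next exponent, then resample by weight."""
    beta_new = next_beta(log_lik, beta, target_cov)
    w = np.exp((beta_new - beta) * (log_lik - log_lik.max()))
    idx = rng.choice(len(samples), size=len(samples), p=w / w.sum())
    # Full TMCMC would now run a short MCMC chain from each resampled point,
    # targeting prior * L**beta_new; that perturbation step is omitted here.
    return samples[idx], log_lik[idx], beta_new
```

Because β is chosen adaptively from the data, the number of stages is not fixed in advance; a sharply peaked likelihood simply produces more, smaller tempering steps.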

Task 2.2: DMAP implementation, Participants: NTUA, Duration: M2-M8

This task focuses on the implementation of the DMAP algorithmic framework. The algorithm will take as input a set of solutions from the complex model and provide a low-dimensional parameterization of the data set. The accuracy of the basic algorithm will be improved using variable-bandwidth kernels. Also, an automated procedure will be developed that produces the optimal kernel coefficients for the DMAP algorithm from the geometric properties of the data set, without requiring any choices by the user. The DMAP algorithm will be part of a surrogate modelling library, which will be developed as an autonomous module and integrated with Korali.
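A minimal NumPy sketch of the DMAP step follows. As one possible variable-bandwidth kernel it uses the self-tuning choice ε_ij = σ_i σ_j, where σ_i is the distance from snapshot i to its k-th nearest neighbour; the automated selection of optimal kernel coefficients described above is not reproduced, and `diffusion_maps`, `k`, `alpha` and `t` are illustrative names. The α = 1 normalisation factors out the sampling density, and the returned coordinates are the leading non-trivial eigenvectors of the Markov matrix, scaled by their eigenvalues.

```python
import numpy as np


def diffusion_maps(X, n_coords=2, k=7, alpha=1.0, t=1):
    """Diffusion-map coordinates of the rows of X (one solution snapshot per row)."""
    # Pairwise squared Euclidean distances between snapshots.
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    # Variable bandwidth: sigma_i is the distance to the k-th nearest neighbour
    # (column 0 of each sorted row is the point itself).
    sigma = np.sqrt(np.sort(d2, axis=1)[:, k])
    K = np.exp(-d2 / np.outer(sigma, sigma))
    # alpha-normalisation (alpha = 1 removes the effect of the sampling density).
    q = K.sum(axis=1)
    K_alpha = K / np.outer(q ** alpha, q ** alpha)
    # Symmetric conjugate S = D^-1/2 K_alpha D^-1/2 of the Markov matrix D^-1 K_alpha.
    deg = K_alpha.sum(axis=1)
    S = K_alpha / np.sqrt(np.outer(deg, deg))
    evals, evecs = np.linalg.eigh(S)
    evals, evecs = evals[::-1], evecs[:, ::-1]  # eigenpairs in descending order
    # Right eigenvectors of the Markov matrix; the first one is constant,
    # so dividing by it normalises psi_0 to 1.
    psi = evecs / np.sqrt(deg)[:, None]
    psi = psi / psi[:, [0]]
    # Drop the trivial eigenvector and scale by eigenvalue**t (diffusion time t).
    return psi[:, 1:n_coords + 1] * evals[1:n_coords + 1] ** t, evals
```

For snapshots sampled uniformly along a one-dimensional curve, the first returned coordinate is strongly correlated with the curve parameter, which is the kind of low-dimensional parameterization the task describes.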

Task 2.3: Implementation of the DMAP-based surrogate, Participants: NTUA, ETHZ/CSELab, Duration: M9-M16

To build the DMAP-based surrogate, the following mapping steps will be developed. The first mapping will rely on NNs and will map new parameter vectors to their images in the low-dimensional DMAP space at minimal cost. To reconstruct the high-dimensional system solutions from their coordinates in the DMAP space, specific implementations of GH and RBF interpolation will be developed and tested. As in Task 2.2, the NN, GH and RBF interpolation components will be part of the surrogate modelling library.
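The reconstruction (decoder) step can be sketched with plain Gaussian RBF interpolation, assuming DMAP coordinates `Z` and the corresponding high-dimensional snapshots `U` are already available. The `RBFDecoder` class, the shape parameter `eps` and the small ridge term `reg` are illustrative assumptions; geometric harmonics and the NN encoder are not shown, so new DMAP coordinates are passed in directly.

```python
import numpy as np


class RBFDecoder:
    """Gaussian RBF interpolant from low-dimensional (e.g. DMAP) coordinates Z
    to high-dimensional solution snapshots U (one snapshot per row)."""

    def __init__(self, Z, U, eps=1.0, reg=1e-10):
        self.Z = np.asarray(Z, dtype=float)
        self.eps = eps
        Phi = self._kernel(self.Z, self.Z)
        # A tiny ridge term guards against ill-conditioning of the kernel matrix.
        self.W = np.linalg.solve(Phi + reg * np.eye(len(self.Z)), U)

    def _kernel(self, A, B):
        # Gaussian kernel exp(-eps^2 * |a - b|^2) for every pair of rows.
        d2 = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
        return np.exp(-(self.eps ** 2) * d2)

    def __call__(self, Z_new):
        """Reconstruct full snapshots for new low-dimensional coordinates."""
        Z_new = np.atleast_2d(np.asarray(Z_new, dtype=float))
        return self._kernel(Z_new, self.Z) @ self.W
```

The cost of evaluating the decoder grows with the number of training snapshots but not with the size of the original model, since only a kernel row and a matrix product with the precomputed weights `W` are needed.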

Task 2.4: Implementation of the surrogate refinement strategy, Participants: NTUA, ETHZ/CSELab, Duration: M17-M20

An algorithm will be developed that can identify areas of the solution manifold requiring higher resolution. The DMAP metric will be used for this purpose, since it gives a better approximation of the ‘true’ distance between two points on the manifold. Pairwise distances between a point in the DMAP space and its k nearest neighbours will be investigated to locate areas with rapid variations in the solution. Once these areas are identified, specific parameter vectors will be chosen to enrich the solution manifold where needed.
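One hypothetical way to turn the k-nearest-neighbour idea into candidate parameter vectors is sketched below: each snapshot is scored by its mean distance to its k nearest neighbours in DMAP space, and for the worst-scoring snapshots a new parameter vector is proposed halfway (in parameter space) towards the farthest of those neighbours. The scoring rule, the midpoint proposal and the name `propose_refinement` are assumptions for illustration, not the project's algorithm.

```python
import numpy as np


def propose_refinement(Z, Theta, k=5, n_new=3):
    """Suggest new parameter vectors where neighbouring snapshots are far apart
    in DMAP space (used here as a proxy for rapid variation of the solution).

    Z:     DMAP coordinates, one row per snapshot.
    Theta: parameter vectors that produced those snapshots, one row each.
    """
    d = np.sqrt(np.sum((Z[:, None, :] - Z[None, :, :]) ** 2, axis=-1))
    np.fill_diagonal(d, np.inf)                 # exclude each point itself
    nbrs = np.argsort(d, axis=1)[:, :k]         # indices of k nearest neighbours
    knn_dist = np.take_along_axis(d, nbrs, axis=1)
    score = knn_dist.mean(axis=1)               # large = poorly resolved region
    worst = np.argsort(score)[::-1][:n_new]
    # For each selected snapshot, pick the farthest of its k neighbours and
    # propose the midpoint of the two parameter vectors.
    far = nbrs[worst, np.argmax(knn_dist[worst], axis=1)]
    return 0.5 * (Theta[worst] + Theta[far]), worst
```

Each proposed vector would then be run through the full model, and its solution added to the snapshot set before the DMAP coordinates are recomputed.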

Task 2.5: Implementation of a surrogate validation strategy, Participants: NTUA, ETHZ/CSELab, Duration: M21-M22

To test the performance of the proposed surrogate methodology, an additional set of system solutions will be acquired for various parameter vectors. The solutions obtained from the surrogate for these vectors will be compared to the exact ones; if the error, measured by appropriate metrics, is below a threshold, the surrogate will be deemed adequate for use in AI-Solve. Otherwise, the previous steps will be recalibrated to improve accuracy.
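For concreteness, one possible adequacy test is the worst-case relative L2 error over the validation set. The metric, the default threshold and the function name `surrogate_is_adequate` are assumptions; the text above leaves the choice of metric open.

```python
import numpy as np


def surrogate_is_adequate(U_exact, U_surrogate, threshold=1e-2):
    """Relative L2 error per validation sample (one sample per row); the
    surrogate passes if the worst-case error is below the threshold."""
    err = (np.linalg.norm(U_surrogate - U_exact, axis=1)
           / np.linalg.norm(U_exact, axis=1))
    return bool(err.max() < threshold), err
```

Returning the per-sample errors as well as the verdict makes it possible to see which parameter vectors fail, which is useful input for the recalibration step.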

The objectives of this WP are the following:

(i) To develop a data-informed sampling scheme.

(ii) To develop a solution-informed sampling scheme.

(iii) To develop a DMAP-based surrogate for extreme dimensional systems.

The Deliverables are:

D2.1: DMAP algorithm prototype (software module) (M8)

D2.2: DMAP surrogate model (report) (M20)

D2.3: DMAP implementation and validation (report) (M22)

Work Package 3

| # | Item | Value |
|---|---|---|
| 1 | Work Package number | WP3 |
| 2 | Work Package title | AI-Solve |
| 3 | Duration | M4 – M28 |
| 4 | Lead beneficiary | NTUA |

This work package focuses on combining theoretical developments and state-of-the-art implementations of a set of solution methods for FEM-based, physics-informed computational models with innovative manifold learning methods, in order to construct an innovative block-iterative solver that can drastically reduce the computational load, mitigate data transfers, recover from hardware errors and support energy-aware operation. To this end, special focus will be given to: (i) domain decomposition method (DDM) and algebraic multigrid (AMG) preconditioning, (ii) coarse problem formulations, (iii) use of the WP2 surrogate model for the coarse problem solution and preconditioning enhancements, (iv) data storage and partitioning, (v) communication-avoiding strategies, (vi) inexact algorithms, (vii) hardware failure mitigation, (viii) energy awareness and efficiency, (ix) validity, (x) scalability to a large number of nodes and cores, (xi) heterogeneity of the compute platform, (xii) robustness to input, (xiii) robustness to noise in data.

WP3 is led by NTUA which will be responsible for the design and implementation of AI-Solve and will drive the efforts for inexact solvers and communication optimisation. ETHZ/CSCS and GRNET will focus on hardware fault tolerance and energy awareness/minimisation. All three partners will have close collaboration in almost all the tasks of the work package. The work is divided into the following tasks:

Task 3.1: DDM preconditioners for exascale systems, Participants: NTUA, ETHZ/CSELab, Duration: M1-M10

A set of DDM preconditioners including PD-DDM and AMG will be investigated and implemented for accelerating the convergence of the solvers of Task 3.3. Some of these methods also incorporate direct solvers for the solution of small local problems, further promoting data-locality and communication reduction. All the aforementioned methods will be distributed and will be specifically designed to efficiently handle sparse data.
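The basic mechanism of a DDM preconditioner with local direct solves can be sketched with a non-overlapping block-Jacobi preconditioner inside preconditioned conjugate gradients (PCG). This is a dense NumPy toy under stated assumptions (symmetric positive definite `A`, subdomains given as index arrays); it is not PD-DDM or AMG, explicit block inverses stand in for local factorisations, and the names `block_jacobi` and `pcg` are hypothetical.

```python
import numpy as np


def block_jacobi(A, blocks):
    """Non-overlapping block-Jacobi preconditioner. Each subdomain block is
    inverted once (a stand-in for a local LU/Cholesky factorisation)."""
    inv_blocks = [(idx, np.linalg.inv(A[np.ix_(idx, idx)])) for idx in blocks]

    def apply(r):
        z = np.zeros_like(r)
        for idx, A_inv in inv_blocks:
            z[idx] = A_inv @ r[idx]      # independent local solve per subdomain
        return z
    return apply


def pcg(A, b, M, tol=1e-8, maxiter=1000):
    """Preconditioned conjugate gradients; returns (x, iterations used)."""
    x = np.zeros_like(b)
    r = b.copy()
    z = M(r)
    p = z.copy()
    rz = r @ z
    for it in range(1, maxiter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= tol * np.linalg.norm(b):
            return x, it
        z = M(r)
        rz, rz_old = r @ z, rz
        p = z + (rz / rz_old) * p
    return x, maxiter
```

Because each block is solved independently, the loop inside `apply` is the part that would run concurrently across subdomains, with only the vector updates and dot products in `pcg` requiring global communication.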

Task 3.2: Surrogate models for preconditioning and coarse problem solution, Participants: NTUA, ETHZ/CSELab, UCY, Duration: M1-M20

The WP2 surrogate model algorithms will be used to enhance the DDM preconditioners of Task 3.1. Specifically, DDMs rely on the solution of a coarse problem to provide numerical scalability. While the coarse problem is “small” compared to the fine one, it can also grow quite large. In this task, we will tailor the surrogate model to provide quick solutions for the coarse problem. Moreover, as an alternative, we will explore the use of the surrogate as a replacement for DDM. Such surrogate-based solution approaches rely on the automatic construction of search vectors for global coupled and/or multiscale Galerkin problems, extrapolated from a very low-cost DMAP surrogate model. Such a global problem guarantees the weak scalability of the technique even without local preconditioning, while still allowing full advantage to be taken of local preconditioners.
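The role of the coarse problem can be illustrated by adding a coarse correction to a one-level preconditioner, giving a two-level additive Schwarz method. In this sketch the coarse space has one piecewise-constant vector per subdomain and the coarse operator is the Galerkin product `R0 @ A @ R0.T`; the `coarse_solve` argument is a hypothetical hook marking where a fast surrogate could replace the exact coarse solve. The names and the choice of coarse space are illustrative assumptions, and `A` is assumed symmetric positive definite.

```python
import numpy as np


def two_level_preconditioner(A, blocks, coarse_solve=None):
    """Additive two-level Schwarz preconditioner: local subdomain solves plus
    a coarse correction on one piecewise-constant vector per subdomain."""
    n = A.shape[0]
    R0 = np.zeros((len(blocks), n))            # coarse restriction operator
    for i, idx in enumerate(blocks):
        R0[i, idx] = 1.0
    A0 = R0 @ A @ R0.T                         # Galerkin coarse operator
    if coarse_solve is None:
        A0_inv = np.linalg.inv(A0)

        def coarse_solve(r0):
            return A0_inv @ r0                 # exact coarse solve (surrogate hook)

    local = [(idx, np.linalg.inv(A[np.ix_(idx, idx)])) for idx in blocks]

    def apply(r):
        z = R0.T @ coarse_solve(R0 @ r)        # global coarse correction
        for idx, A_inv in local:               # plus independent local solves
            z[idx] += A_inv @ r[idx]
        return z
    return apply


def pcg_iterations(A, b, M, tol=1e-8, maxiter=2000):
    """Preconditioned conjugate gradients; returns (x, iterations used)."""
    x = np.zeros_like(b)
    r = b.copy()
    z = M(r)
    p = z.copy()
    rz = r @ z
    for it in range(1, maxiter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= tol * np.linalg.norm(b):
            return x, it
        z = M(r)
        rz, rz_old = r @ z, rz
        p = z + (rz / rz_old) * p
    return x, maxiter
```

Passing `coarse_solve=lambda r0: np.zeros_like(r0)` removes the coarse correction and recovers a one-level preconditioner, which makes it easy to compare iteration counts as the number of subdomains grows, for example on a 1D Laplacian split into many small blocks.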

Task 3.3: Inexact block-iterative solvers for scalability and error resilience, Participants: NTUA, ETHZ/CSELab, Duration: M5-M2

A series of block-iterative solution algorithms will be implemented and enhanced by the strategies devised in Tasks 3.1 and 3.2. These solvers will be further extended to exploit inexact computing strategies and tolerate errors, while also employing inexact arithmetic techniques for the DDM preconditioners. The foundational algorithmic framework is mixed-precision iterative refinement, in which the bulk of the computations can be evaluated with greatly relaxed accuracy. First the accuracy of computations will be relaxed, then the accuracy of data representation, and finally the accuracy of data movement over memory and network. Relaxed-accuracy data movement will be simulated, in the absence of actual hardware with these characteristics. All findings and observations will feed into WP6, in particular Task 6.2, for the design of the system architecture.
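The textbook form of mixed-precision iterative refinement is sketched below: the costly solve runs in single precision, while residuals and the accumulated solution are kept in double precision, which recovers double-precision accuracy provided the condition number is well below the reciprocal of single-precision unit roundoff. This is a generic NumPy illustration, not AI-Solve; the explicit float32 inverse stands in for a reused low-precision factorisation or inner iterative solver, and `mixed_precision_refinement` is a hypothetical name.

```python
import numpy as np


def mixed_precision_refinement(A, b, tol=1e-12, max_iter=50):
    """Solve Ax = b: corrections are computed in float32, while residuals and
    the solution are accumulated in float64. Returns (x, refinement steps)."""
    A64 = np.asarray(A, dtype=np.float64)
    b64 = np.asarray(b, dtype=np.float64)
    # Low-precision "factorisation", computed once: a float32 inverse stands in
    # for a reused LU factorisation or an inner low-precision iterative solver.
    A32_inv = np.linalg.inv(A64.astype(np.float32))
    x = (A32_inv @ b64.astype(np.float32)).astype(np.float64)
    for k in range(max_iter):
        r = b64 - A64 @ x                      # residual in high precision
        if np.linalg.norm(r) <= tol * np.linalg.norm(b64):
            return x, k
        d = A32_inv @ r.astype(np.float32)     # correction in low precision
        x += d.astype(np.float64)              # update in high precision
    return x, max_iter
```

The expensive O(n³) work happens once in float32; each refinement step costs only a float64 matrix-vector product and a float32 application of the stored inverse, which is the trade-off the task exploits.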

Task 3.4: Scalable sparse computations, Participants: NTUA, ETHZ/CSELab, Duration: M5-M24

Sparse computations, in particular sparse matrix-vector multiplication (SpMV), are expected to be among the dominant execution kernels of the DCoMEX platform. In large-scale simulations SpMV is a highly memory-bound kernel. In this task, SpMV will be optimised to reduce data movement through aggressive data compression, to exploit the memory subsystem of NUMA configurations, to apply vectorisation to the computations, and to adapt specifically to accelerator hardware, including Nvidia’s Tensor Cores. The work will extend SparseX, a highly optimised library for sparse computations developed by consortium members.
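To make the memory-bound nature of SpMV concrete, the sketch below shows the widely used compressed sparse row (CSR) format and a rough arithmetic-intensity estimate. It is a plain-Python illustration under assumed 8-byte values and 4-byte indices; it is not the SparseX API, whose compressed formats go beyond CSR.

```python
def dense_to_csr(D):
    """Compress a dense matrix into CSR arrays: values, column indices, row pointers."""
    values, col_idx, row_ptr = [], [], [0]
    for row in D:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def spmv_csr(values, col_idx, row_ptr, x):
    """y = A x. Each nonzero is streamed once; x is read indirectly through col_idx."""
    y = []
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            acc += values[k] * x[col_idx[k]]
        y.append(acc)
    return y


def csr_intensity(nnz, nrows, val_bytes=8, idx_bytes=4):
    """Flops per byte of matrix data. Ignores traffic for x and y, so it is an
    optimistic upper bound on the true arithmetic intensity."""
    flops = 2 * nnz  # one multiply and one add per nonzero
    matrix_bytes = nnz * (val_bytes + idx_bytes) + (nrows + 1) * idx_bytes
    return flops / matrix_bytes


vals, cols, ptr = dense_to_csr([[4, 0, 1], [0, 3, 0], [2, 0, 5]])
print(spmv_csr(vals, cols, ptr, [1, 2, 3]))  # [7.0, 6.0, 17.0]
print(csr_intensity(nnz=10**8, nrows=10**7))  # roughly 0.16 flop/byte
```

For CSR the estimate stays near 2 flops per 12 bytes regardless of matrix size, so every byte saved by compressing values or indices translates fairly directly into speed.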

Task 3.5: Communication optimisation, Participants: NTUA, ETHZ/CSELab, Duration: M5-M24

Partitioning algorithms based on state-of-the-art hypergraph partitioning methods will be employed to distribute data so as to minimise communication between nodes. In addition, asynchronous communication protocols tailored to DCoMEX applications will be designed. More specifically, the communication patterns of the exascale-targeted solvers will be optimised using the following properties and techniques:

a) Use of block-iterative solvers (see Task 3.3).

b) Overlap of computation and communication.

c) Use of relaxed-precision communication to save bandwidth.

d) Introduction of asynchronicity in order to minimise latency penalties.
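The quantity that hypergraph partitioning minimises can be illustrated for a sparse matrix-vector product with a 1D row-wise data distribution (the column-net model). The following hedged sketch only evaluates the communication volume of a given row-to-node assignment; it does not search for a good one. The 8×8 tridiagonal test matrix and the function name `comm_volume` are illustrative assumptions.

```python
def comm_volume(nonzeros, owner):
    """Communication volume of y = A x under a 1D row-wise partition (column-net model).

    nonzeros: iterable of (i, j) positions with a_ij != 0.
    owner[k]: part that owns row k and the vector entries x_k and y_k.
    Each x_j must be sent from its owner to every other part holding a row with a
    nonzero in column j, i.e. (lambda_j - 1) words per column.
    """
    parts = {}
    for i, j in nonzeros:
        parts.setdefault(j, {owner[j]}).add(owner[i])
    return sum(len(p) - 1 for p in parts.values())


n = 8
tridiag = [(i, j) for i in range(n) for j in range(n) if abs(i - j) <= 1]
blocked = [0] * 4 + [1] * 4         # contiguous halves
cyclic = [k % 2 for k in range(n)]  # round-robin assignment
print(comm_volume(tridiag, blocked), comm_volume(tridiag, cyclic))  # 2 8
```

Contiguous blocks only exchange the two boundary entries, whereas a round-robin assignment forces every column across the partition boundary; a hypergraph partitioner looks for assignments of the first kind on far less regular meshes.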

Task 3.6: Fault tolerant, energy-aware operation, Participants: NTUA, ETHZ/CSELab, Duration: M5-M30

In this task, the preconditioners and solvers developed in Tasks 3.1 to 3.3 will be extended so that vector updates and communication in each iteration may be random, depending on the failure rates of the exascale system on which they are deployed. This will allow us to lay strong theoretical foundations for scalable fault-tolerant methods, particularly when some nodes may fail and never recover (or do not recover within a reasonable time, i.e., before the computational task is due to complete). Scalable code will be made publicly available. Moreover, policies and mechanisms for injecting energy awareness into the operation of the DCoMEX platform will be investigated, especially for the solver methods that dominate execution time (and energy consumption). These mechanisms will measure energy consumption either explicitly, from the relevant monitoring facilities, or implicitly, through appropriate power and energy models; analyse this information to locate sources of energy waste (e.g., memory, network); and take actions towards more energy-efficient operation (e.g., dynamic voltage and frequency scaling (DVFS), thread throttling).
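A small experiment suggests why randomly lost updates need not prevent convergence. For a strictly diagonally dominant matrix, every Jacobi update shrinks that component's error below a fixed fraction of the current maximum error, so skipping some updates only delays convergence as long as each component is eventually updated. The sketch below simulates lost updates with a hypothetical `fail_prob`; it illustrates that classical argument and is not the project's fault-tolerant method.

```python
import random


def jacobi_with_failures(A, b, fail_prob, sweeps, rng):
    """Jacobi sweeps in which each component update is lost with probability fail_prob."""
    n = len(b)
    x = [0.0] * n
    for _ in range(sweeps):
        x_old = x[:]  # all updates in a sweep read the previous iterate
        for i in range(n):
            if rng.random() < fail_prob:
                continue  # simulated failure: the update never arrives, x[i] keeps its old value
            s = sum(A[i][j] * x_old[j] for j in range(n) if j != i)
            x[i] = (b[i] - s) / A[i][i]
    return x


def max_residual(A, x, b):
    return max(abs(bi - sum(a * xj for a, xj in zip(row, x))) for row, bi in zip(A, b))


# Strictly diagonally dominant test matrix: off-diagonal row sums are at most half the diagonal.
A = [[4.0, 1.0, 0.0, 0.0],
     [1.0, 4.0, 1.0, 0.0],
     [0.0, 1.0, 4.0, 1.0],
     [0.0, 0.0, 1.0, 4.0]]
b = [1.0, 2.0, 3.0, 4.0]
x = jacobi_with_failures(A, b, fail_prob=0.3, sweeps=200, rng=random.Random(42))
print(f"max residual with 30% of updates lost: {max_residual(A, x, b):.1e}")
```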

Task 3.7: Algorithmic implementation of AI-Solve and considerations for the exascale, Participants: NTUA, ETHZ/CSELab, GRNET, ETHZ/CSCS, Duration: M7-M36

This task will implement and integrate all algorithms and techniques developed in Tasks 3.1 – 3.6 into a software module that will be further optimised in Task 6.5 and integrated into the MSolve/Korali platform in Task 6.4.

The objectives of this WP are the following:

(i) To design and implement AI-Solve, an AI-enhanced linear system of equations solver

(ii) To design and implement scalable strategies for efficient execution of AI-Solve on exascale systems

(iii) To inject energy awareness into the operation of the AI-Solve library on the MSolve/Korali platform

(iv) To further enhance scalability and reduce power usage by minimising communication.

The Deliverables are:

D3.1: AI-Solve library prototype (software module) (M20)

D3.2: AI-Solve library prototype (report) (M20)

D3.3: AI-Solve performance evaluation (report) (M28)

Working Package 4

| 1 | Working Package number: | WP4 |

| 2 | Working Package Title: | UQ and Bayesian Analysis |

| 3 | Duration: | M2 – M28 |

| 4 | Lead beneficiary: | ETHZ/CSELab |

Task 4.1: Development of a novel hierarchical Bayesian sampling method, Participants: NTUA, ETHZ/CSELab, Duration: M2-M12

A novel methodology for sampling complex Bayesian graphs will be developed in this task. These graphs will result from modelling posterior Bayesian distributions that account for inhomogeneous data and for models with multiple scales. Generalizations and combinations of existing techniques, such as importance sampling (IS) and the stochastic approximation expectation-maximisation (SAEM) algorithm, will be used to accelerate the sampling procedure.

Task 4.2: Extension of the Korali language for the description of general Bayesian directed acyclic graphs, Participants: NTUA, ETHZ/CSELab, Duration: M2-M10

The descriptive language of Korali will be extended to accommodate the description of complex Bayesian problems. The dependencies between the variables in the posterior distribution will be given as a directed acyclic graph (DAG). We will integrate an open-source DAG library to verify that the graph contains no loops, and use it throughout the engine to ensure that no variable interdependencies generate a deadlock. Finally, the posterior distribution of the hierarchical Bayesian model (HBM) will be constructed, ready for sampling with any sampling algorithm.
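The loop-absence check described above amounts to a topological sort of the variable dependency graph. Since the DAG library to be integrated is not named, the sketch below uses Kahn's algorithm in plain Python; the dictionary format and the variable names (`psi`, `theta_1`, `data_1`) are hypothetical. A returned order lists every variable after its parents, which is also a natural order for evaluating the factors of the posterior.

```python
from collections import deque


def topological_order(parents):
    """parents maps each variable to the variables it depends on.
    Returns an order in which every variable follows its parents,
    or raises ValueError if the dependencies contain a loop."""
    nodes = set(parents) | {p for ps in parents.values() for p in ps}
    indegree = {n: 0 for n in nodes}
    children = {n: [] for n in nodes}
    for child, ps in parents.items():
        for p in ps:
            indegree[child] += 1
            children[p].append(child)
    queue = deque(sorted(n for n in nodes if indegree[n] == 0))  # variables with no parents
    order = []
    while queue:
        n = queue.popleft()
        order.append(n)
        for c in children[n]:
            indegree[c] -= 1
            if indegree[c] == 0:
                queue.append(c)
    if len(order) != len(nodes):  # nodes left over are on (or behind) a cycle
        raise ValueError("dependency loop among: " + ", ".join(sorted(nodes - set(order))))
    return order


# Two-level hierarchical model: hyperparameter psi -> per-dataset theta_i -> data_i.
hbm = {"theta_1": ["psi"], "theta_2": ["psi"], "data_1": ["theta_1"], "data_2": ["theta_2"]}
print(topological_order(hbm))  # ['psi', 'theta_1', 'theta_2', 'data_1', 'data_2']
```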

Task 4.3: Development of a load-balanced scalable sampling algorithm, Duration: M2-M10

A new stochastic sampling method based on transitional Markov chain Monte Carlo (TMCMC) will be researched and developed that allows fully scalable parallelisation while also preventing, to the maximum extent possible, load imbalance among samples of the same sampling generation.
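Classical TMCMC advances through a sequence of tempered distributions proportional to prior × likelihood^p. It chooses each new exponent p so that the coefficient of variation (CoV) of the importance weights stays near a target (commonly 1), then resamples and runs one MCMC chain per distinct resampled parent, with length equal to that parent's count. The sketch below, using a hypothetical narrow Gaussian log-likelihood, shows where the load imbalance comes from. It illustrates standard TMCMC, not the new method this task will develop.

```python
import math
import random


def weight_cov(loglik, dp):
    """Coefficient of variation of the plausibility weights w_k = L_k ** dp."""
    m = max(loglik)  # shift for numerical stability; the CoV is scale-invariant
    w = [math.exp(dp * (ll - m)) for ll in loglik]
    mean = sum(w) / len(w)
    var = sum((wi - mean) ** 2 for wi in w) / len(w)
    return math.sqrt(var) / mean


def next_exponent(loglik, p, cov_target=1.0, tol=1e-10):
    """Bisection for p_next in (p, 1] with weight CoV equal to cov_target
    (the CoV grows monotonically with the step p_next - p)."""
    if weight_cov(loglik, 1.0 - p) <= cov_target:
        return 1.0
    lo, hi = 0.0, 1.0 - p
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if weight_cov(loglik, mid) > cov_target:
            hi = mid
        else:
            lo = mid
    return p + lo


def chain_lengths(weights, n, rng):
    """Multinomial resampling: the count of each parent is the length of the chain it seeds."""
    counts = [0] * len(weights)
    for k in rng.choices(range(len(weights)), weights=weights, k=n):
        counts[k] += 1
    return counts


rng = random.Random(0)
samples = [rng.uniform(-1.0, 1.0) for _ in range(200)]  # draws from a uniform prior
loglik = [-0.5 * (s / 0.1) ** 2 for s in samples]        # hypothetical narrow likelihood
p1 = next_exponent(loglik, p=0.0)
m = max(loglik)
weights = [math.exp(p1 * (ll - m)) for ll in loglik]
lengths = chain_lengths(weights, len(samples), rng)
print(f"p1 = {p1:.4f}, longest chain = {max(lengths)}, parents not selected = {lengths.count(0)}")
```

Typically a few parents receive many copies while many receive none, so chains handed to different workers differ greatly in length; this is the imbalance a load-balanced variant must avoid.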

Task 4.4: Integration of the new methods in the Korali framework, Participants: NTUA, ETHZ/CSELab, Duration: M12-M18

The algorithms developed in Task 4.1 will be implemented in Korali, along with a user-friendly interface that provides the maximum generality allowed by the algorithm.

The objective of this WP is to develop new exascale-ready methods for sampling the posterior distribution of hierarchical Bayesian networks. The algorithms will be integrated into the Korali framework.

The Deliverables are:

D4.1: A novel method for the sampling of Bayesian graphs for the inference of parameters in computationally demanding models, published in a refereed journal (M12)

D4.2: Creation, integration, and documentation of a new Korali module based on the methods developed in the previous item (M20)

D4.3: A novel load-balanced TMCMC-based method for scalable sampling (M12)

D4.4: Incremental versions of Korali, improving on performance, features, and documentation (M28)

Working Package 5

| 1 | Working Package number: | WP5 |

| 2 | Working Package Title: | Pre-processing and computational model |

| 3 | Duration: | M1 – M24 |

| 4 | Lead beneficiary: | TUM |

The work will be carried out primarily by TUM, which will provide its expertise in 3D image segmentation. The work is divided into the following tasks:

Task 5.1: Algorithms for 3D geometry reconstruction from 3D images, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M1-M8

3D image data will be further processed to represent the geometrical domain captured in the images. Standard algorithms (e.g., thresholding, k-means) and deep learning algorithms (e.g., 2D and 3D U-Net) will be employed to process arbitrary 3D images. The resulting data will be fed into MSolve to generate FEM discretizations and the corresponding systems of linear equations, which will then be solved by AI-Solve. The image segmentation algorithms will be pre-trained for the available applications. Interactive segmentation will also be available for adapting the image pre-processing to new tasks, and interfaces to other pre-trained algorithms will be provided.
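Of the standard algorithms listed, k-means on voxel intensities is the simplest to show. The sketch below is a minimal illustration resting on several assumptions: a toy 2×2×2 volume stored as nested lists, two classes (background and structure), and quantile initialisation. It is not the project's segmentation pipeline; for two classes it effectively places a single intensity threshold midway between the class means.

```python
def kmeans_1d(values, k=2, iters=100):
    """Lloyd's k-means on scalar intensities; returns the k cluster centres in ascending order."""
    vs = sorted(values)
    centres = [vs[int((c + 0.5) * len(vs) / k)] for c in range(k)]  # quantile initialisation
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in values:
            groups[min(range(k), key=lambda c: abs(v - centres[c]))].append(v)
        new = [sum(g) / len(g) if g else centres[c] for c, g in enumerate(groups)]
        if new == centres:  # assignments are stable
            break
        centres = new
    return sorted(centres)


def segment(volume, centres):
    """Label every voxel of a nested-list 3D volume with the index of its nearest centre."""
    def nearest(v):
        return min(range(len(centres)), key=lambda c: abs(v - centres[c]))
    return [[[nearest(v) for v in row] for row in plane] for plane in volume]


volume = [[[10, 12], [200, 210]], [[11, 205], [9, 198]]]  # toy 2x2x2 image
flat = [v for plane in volume for row in plane for v in row]
centres = kmeans_1d(flat)
print(centres)                   # [10.5, 203.25]
print(segment(volume, centres))  # [[[0, 0], [1, 1]], [[0, 1], [0, 1]]]
```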

Task 5.2: Image-based estimation of geometric uncertainties, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M1-M12

While Task 5.1 will implement image segmentation and its interoperation with MSolve, as well as the workflow for using these algorithms in DCoMEX and its applications, this task deals with estimating the uncertainties in the geometries from the available image data. This will be done using different techniques, such as dropout sampling, learning generative CNN models and direct learning of uncertainties; multiple segmentations of the structures of interest in a subset of the data will also be an option. The algorithms will be integrated with those of Task 5.1 so that uncertainties can be propagated through the simulation pipeline.
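Dropout sampling (Monte Carlo dropout) keeps dropout active at prediction time and repeats the forward pass; the spread of the predictions serves as an estimate of model uncertainty. The sketch below shows the mechanics on a made-up one-hidden-layer network for a single voxel intensity. The weights, the dropout rate of 0.2 and the 200 passes are arbitrary assumptions; a real segmentation network (e.g., a U-Net) would apply the same idea per voxel.

```python
import math
import random
import statistics

# Hypothetical toy network: one hidden ReLU layer mapping a voxel intensity in [0, 1]
# to a foreground probability. The weights are invented for illustration only.
W1 = [4.0, -3.0, 2.5, 1.0]
B1 = [-1.0, 2.0, -0.5, 0.0]
W2 = [1.5, -1.2, 1.0, 0.8]
B2 = -0.5


def forward(x, rng, drop_rate):
    """One forward pass with inverted dropout on the hidden layer, kept active at inference."""
    z = B2
    for w1, b1, w2 in zip(W1, B1, W2):
        h = max(0.0, w1 * x + b1)
        if rng.random() < drop_rate:
            continue  # this hidden unit is dropped for the current pass
        z += w2 * h / (1.0 - drop_rate)
    return 1.0 / (1.0 + math.exp(-z))


def mc_dropout(x, passes=200, drop_rate=0.2, seed=0):
    """Mean prediction and its standard deviation over repeated stochastic passes."""
    rng = random.Random(seed)
    preds = [forward(x, rng, drop_rate) for _ in range(passes)]
    return statistics.fmean(preds), statistics.pstdev(preds)


for intensity in (0.1, 0.5, 0.9):
    mean, std = mc_dropout(intensity)
    print(f"intensity {intensity}: p(foreground) = {mean:.3f} +/- {std:.3f}")
```

Voxels whose foreground probability varies widely across passes would be the ones flagged as geometrically uncertain.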

Task 5.3: Post-processing and visualising simulation results, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M24-M36

Simulation results and their uncertainties need to be summarised and visualised in the physical modelling domain and in their original spatial geometries, so that they can be inspected for plausibility and learned from. Similarly, results such as parametric uncertainties and their correlations need to be inspected in appropriate low-dimensional representations (clusters, projections) of their high-dimensional manifolds. Key outliers in the simulated parameters or modelled variables need to be detected and highlighted. Tools enabling this post-processing and result data analytics will be provided by the consortium members and further optimised for the application case studies.

The objectives of this WP are the following:

(i) To build 3D spatial models from image volumes, representing the actual geometries of the problems solved by AI-Solve.

(ii) To quantify uncertainty with respect to specific geometrical characteristics of the acquired images.

(iii) To allow fast visualisation of results in the use-case studies.

The Deliverables are:

D5.1: UQ aware image segmentation prototype (software module) (M8)

D5.2: UQ aware image segmentation final software (software module) (M12)

D5.3: Post-processing methodology (report) (M36)

D5.4: Data processing software final evaluation and documentation (report) (M36)

Working Package 6

| 1 | Working Package number: | WP6 |

| 2 | Working Package Title: | Software optimisation, integration and testing |

| 3 | Duration: | M1 – M24 |

| 4 | Lead beneficiary: | ETHZ/CSCS |

This work package will accompany and closely interact with the core research and development work packages (WP2-WP5) throughout the entire project. It will define the system requirements and implementation framework, and provide rapid integration and prototyping, continuous testing and validation, and low-level code optimisation.

WP6 involves the core implementation partners NTUA, ETHZ/CSELab, ETHZ/CSCS, and GRNET. This work package is divided into the following tasks:

Task 6.1: Software and hardware requirements, Participants: ETHZ/CSELab, NTUA, GRNET, ETHZ/CSCS, Duration: M1-M6

This task will analyse the basic software and hardware requirements of the applications and of the research conducted in the project. A bottom-up approach will be adopted: the methods and applications will be broken down into their basic constituents in order to map these onto the well-known Berkeley Dwarfs. Next, runtime, scalability and sustained performance requirements will be analysed together with I/O and memory needs. In addition, energy and power requirements will be measured. This strategy will allow us to produce a clear and detailed atlas of the software and hardware requirements and to make projections for future needs. This process is considered a crucial step towards the co-design of exascale systems. An underlying goal of the task is also to analyse the extent to which inexact computations can be integrated into the project's applications. The Berkeley Dwarfs approach is particularly useful in this context, as it allows us to focus on selected important kernels without attempting to completely rewrite complex applications.

Task 6.2: System design and architectures, Participants: NTUA, GRNET, ETHZ/CSELab, ETHZ/CSCS, Duration: M4-M6

This task will use the input provided by Task 3.3 to propose a system design and overall architecture following a co-design approach: a first version of the application will be proposed midway through the task and then calibrated with input from the partners as well as from the algorithmic implementation of AI-Solve (Task 3.7). In particular, the task will focus on the following targets: (i) deciding the node architecture, the number of GPU units, and the amount and structure of the memory hierarchy, (ii) suggesting a network architecture that can handle the application requirements, and (iii) defining the required modules and their interaction to provide the overall framework functionality.

Task 6.3: Software engineering framework, Participants: ETHZ/CSELab, NTUA, GRNET, ETHZ/CSCS, Duration: M1-M6

This task will focus on providing runtime environments and tools that make the tools developed in the project easy to use. In particular, the task will select the software development environment, and especially the programming model. Programming models will be investigated including (but not limited to) pure MPI, hybrid MPI/OpenMP, task-based schemes (e.g., TBB, Cilk, OpenMP tasks, OmpSs), PGAS-based schemes (e.g., UPC, Coarray Fortran) and accelerator-specific environments (CUDA, OpenCL, OpenACC). Once more, a bottom-up approach will be adopted. Since the underlying project applications are extremely complex, the AI-Solve implementation of Task 3.7 will be used to select particularly heavy kernels on which the various programming models will be tested. Particular emphasis will be placed on introducing asynchronicity wherever possible.

Task 6.4: Integration, Participants: ETHZ/CSELab, NTUA, GRNET, ETHZ/CSCS, Duration: M7-M24

The system modules and tools developed in WP3, WP4 and WP5 will be integrated according to the overall architecture defined in Task 6.2. Integration will start early in the project to ensure timely, high-quality delivery of the project’s outcomes. To this end, three prototypes of the developed platform will be integrated, tested and evaluated (i.e., two during the project, at month 15 [baseline] and month 30 [intermediate], and one at the end of the project [final]), while the individual components will be revised by the respective developing partners where necessary. Feedback from the deployment of the applications will also drive modifications to the integrated platform as well as to the individual components and tools. For this reason, this task continues until the end of the project. Moreover, to maximise the impact of the project results, DCoMEX modules will explore interfacing with well-established, popular software packages (e.g., PETSc). Task 6.4 receives the relevant modules from WP2-WP5 as input and interacts with WP7 (Applications) and WP8 (Performance evaluation).

Task 6.5: Low-level code optimisations, Participants: NTUA, ETHZ/CSELab, ETHZ/CSCS, GRNET, Duration: M7-M24

To realise the innovations of the developed algorithms and support scalability to the exascale, low-level code optimisations need to be applied extensively. These include: (i) experimentation with compilers, compiler flags and library optimisations to provide the best possible system-software configuration for each platform, (ii) generic low-level optimisations that cannot always be applied by the compiler (e.g., cache blocking for cache locality), (iii) system-specific optimisations (e.g., vectorisation using the operations provided by the platform ISA), and (iv) memory placement and management to ensure that deep memory hierarchies are used efficiently (e.g., dynamically using MCDRAM on KNL processors based on memory access patterns, or targeting non-volatile memory for data sets).

Task 6.6: Testing and benchmarking, Participants: NTUA, ETHZ/CSELab, ETHZ/CSCS, GRNET, Duration: M13-M24

The role of this task is to test and benchmark the decisions and designs of all previous WP6 tasks on real-scale applications and platforms. The task starts after the analysis tasks have been completed and will closely interact with Task 6.4 and WPs 2, 3 and 4. Initial benchmarking results will be used during the co-design cycle to calibrate the implementation of the DCoMEX ecosystem. Large scale-out systems that are already available (e.g., Cray XC40 systems) will be used, together with further scale-out systems that become available when the task starts. This task will also automate the testing framework for the software developed, to ensure that testing is undertaken consistently and regularly across the project and is fully integrated with the development process. Likewise, it will define a set of low-level benchmarks that integrate the numerical solvers, and define the procedures for benchmarking the solvers and applications that will qualify the solvers for the rest of the project. Central resources will be deployed to enable easy tracking of testing and benchmarking status across the work packages, using tools such as Buildbot or Jenkins.

The objectives of this WP are the following:

(i) To investigate and define the prerequisite software and hardware environments required by this project.

(ii) To define the testing and benchmarking technologies, frameworks, and methodologies that will be utilised throughout the project.

(iii) To integrate the Korali, MSolve, and AI-Solve packages to deliver the finished end-to-end DCoMEX platform.

(iv) To enable DCoMEX modules to interact with other well-established scientific software libraries, such as PETSc.

(v) To apply low-level CPU and GPU performance optimisations to the MSolve and AI-Solve algorithms.

(vi) To distribute DCoMEX modules to the largest European and worldwide supercomputers.

(vii) To test and validate the correctness and integrity of the various modules and the DCoMEX platform.

The Deliverables are:

D6.1: System requirements, design and architecture (report) (M6)

D6.2a: DCoMEX codes baseline prototype (software module) (M15)

D6.2b: DCoMEX codes baseline prototype (report) (M15)

D6.3: DCoMEX modules integrated in MSolve/Korali (software module) (M24)

Working Package 7

| 1 | Working Package number: | WP7 |

| 2 | Working Package Title: | Applications |

| 3 | Duration: | M5 – M36 |

| 4 | Lead beneficiary: | UCY |

In this WP, two application-specific modelling frameworks will be integrated into the DCoMEX platform: a detailed biomechanical and systems biology model, and a multiscale material design model. Both models will be validated against available data. For the biomechanical model, robust UQ and sensitivity analysis will be performed to identify the biomechanical (e.g., tumour stiffness, mechanical stress, perfusion) and biochemical/biological (e.g., specific growth factors, immune cell populations) parameters of the tumour microenvironment that most strongly determine the efficacy of immunotherapy. Subsequently, multiple simulations will be performed using the informed model and the AI-Solve solver, so that the model can predict the range of values of these critical parameters within which immunotherapy is optimised and can support the design of optimal treatment protocols. For the material design paradigm, module 1 will be used to digitally reconstruct and subsequently analyse large-scale representative volume elements (RVEs) with realistic descriptions of the random microstructural topologies of the various fillers; these RVEs will then be fed into the informed multiscale model of the piping system to optimise its thermal conductivity properties.

Task 7.1: Development and integration of the mathematical and computational model into the DCoMEX framework, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M5-M36

The research teams of NTUA and UCY will work closely together to further develop the mathematical framework and to integrate it into DCoMEX. The biomechanical and systems biology mathematical framework has been developed by the UCY team, and versions of it have been published in Nature Nanotechnology, PNAS, Cancer Research and other high-profile journals [CH12, ST13, STY13, MP17, AN18, VO19]. A systems biology approach describing the interactions among growth factors, cancer and immune cell populations and the tumour vasculature, as well as oxygen supply and drug delivery, has been developed in [MP20] and will be further extended to account for all known mechanisms. A flowchart of the model and of the modules that comprise it is presented in [MAH19]. This approach is coupled to solid and fluid mechanics solvers as well as to a vascular remodelling module. Furthermore, the methodology can directly incorporate imaging data of the anatomy of the tumour, the host tissue and the tumour vasculature. An incomplete version of the model is currently solved using the commercial finite element software COMSOL; because of its high computational demands, it is practically impossible to run the large-scale and multiple simulations required to solve the model in real geometries. Integrating the model into the DCoMEX framework will address these computational needs.

Task 7.1.1: Verification and model validation with preclinical and clinical data, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M5-M36

The NTUA, UCY and TUM teams will work together on the robust validation of the model. The UCY team has collected a large amount of preclinical data during an ERC Starting Grant (ERC-2013-StG-336839 “ReEngineeringCancer”) (2014-2018) [MAH18, CH17, YU19, LIX19] and will continue to do so during the ERC Consolidator Grant (ERC-2019-CoG-863955, “Immuno-Predictor”) (2020-2025). Importantly, the Immuno-Predictor project focuses on the impact of the tumour microenvironment on the efficacy of immunotherapy, using preclinical tumour models of breast and pancreatic cancers and melanomas, and also involves a clinical study in humans. Therefore, the data produced by this project will be used directly to validate the proposed mathematical and computational model. Human data on brain tumours will also be provided by TUM. These data include anatomical information on brain tumours and their progression during treatment, perfusion data and diffusion tensor imaging data/tractography. This information can be incorporated directly into our modelling framework to compare model predictions with human data. Note that immunotherapy has already been approved for the treatment of breast cancer and melanomas, and there are ongoing clinical trials of the effectiveness of immunotherapy in pancreatic and brain tumours. The accuracy of results from mathematical and computer models of biological systems is often limited by uncertainties in the experimental data used to estimate parameter values. Global uncertainty and sensitivity analysis, employing the data-mining algorithms of module 1, will be performed to identify which model parameters most significantly affect the solution of the model, and to what extent. The analysis will be performed by the TUM team.

Task 7.1.2: Derivation of protocols for optimised use of immunotherapy, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M24-M36

Multiple simulations will be performed, varying within the physiological/pathophysiological range the values of the key model parameters that Task 7.1.1 identifies as affecting the solution of the model. The parameters of the tumour microenvironment will also be varied to correspond to different tumour types and stages of progression. From the model predictions, a map will be constructed depicting the therapeutic outcome (i.e., extent of tumour shrinkage) as a function of the model parameters. The map will provide guidelines for optimal treatment strategies and could also serve as the basis for further clinical validation in the future, which could lead to the first computational tool for predicting a tumour’s response to immunotherapy.

Task 7.2: Multiscale modelling of heat exchange system, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M5-M36

In this task, a multiscale modelling framework for the generic heat exchange piping system of Fig. 8a will be developed. First, isolated fillers (GRF, CNT/G) will be modelled at the nanoscale using Molecular Structural Mechanics [SC10] to capture their effective thermal and mechanical properties as well as the thermal resistance at material interfaces. Random mesoscale RVEs, compatible with 3D image data, will then be generated using the technology developed in module 1. These models are coupled with the isolated-filler models of the previous scale to form detailed mesoscale RVE FEM models. A macroscale nested FE2 solution scheme is then constructed for the pipe, which encompasses all scales and delivers the overall thermomechanical properties of the system. An initial dataset of these offline calculations will be used to construct a DMAP surrogate (module 2). The detailed multiscale model, together with its DMAP substitute, will finally form the AI-Solve solver for this application.

Task 7.2.1: Verification and model validation, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M5-M36

In this task, the results of the numerical simulations obtained in Task 7.2 will be systematically compared with experimental data. In this way, the basic model assumptions will be revisited, validated, and updated or recalibrated if necessary. The hierarchical Bayesian analysis tools of module 4 will be applied to update prior beliefs about the probabilistic description of the uncertain parameters used in the stochastic modelling of the random RVEs into posterior beliefs. In particular, the information obtained at the fine scale will be used to estimate the posterior distribution of the random variables of the coarse scale. In this way, the samples generated at the finer scales will be compatible with the information available at the upper scales.

Task 7.2.2: Optimum design of materials – next generation RCs, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M24-M36

The aim of this task is to use the DCoMEX platform with the AI-Solve customised for this application, in order to compute RC compositions with mixed CNT/G/GRTF fillers with optimum thermal conductivities and fair mechanical properties. As a next step, optimum designs of RCs reinforced with 3D structured networks of SGs, SCNTs and/or CNS will be evaluated leading to next generation RC compositions with optimal thermal and mechanical properties.

Task 7.3: DCoMEX-BIO and DCoMEX-MAT commercialisation plans, Participants: NTUA, ETHZ/CSELab, UCY, TUM, Duration: M20-M36

Our proposed plan hinges on working closely with software development companies, medical systems companies, material developers and investors. We plan to visit and present the DCoMEX-BIO and DCoMEX-MAT products to international investors (e.g., venture capital funds), software companies and industrial end-user companies (medical, aerospace, automotive, material developers) to attract their interest, with the aim of establishing commercial agreements or even licensing or co-developing the products with them. We will explore the possibility of creating a spin-off or start-up company, or of licensing/co-developing the software product with a software and/or medical systems company in the case of DCoMEX-BIO, or with a material developer in the case of DCoMEX-MAT. For both products, a commercialisation plan will be developed within this project, but the actual commercialisation of DCoMEX products lies beyond the scope and timeline of the proposed work.

The objectives of this WP are the following:

(i) To apply the DCoMEX framework to the prediction and optimisation of cancer immunotherapy.

(ii) To apply the DCoMEX framework to the material design of heat-exchange systems.

The Deliverables are:

D7.1a: DCoMEX-BIO (software module) (M30)

D7.1b: DCoMEX-BIO (report) (M30)

D7.2a: DCoMEX-MAT (software module) (M30)

D7.2b: DCoMEX-MAT (report) (M30)

D7.3: Protocols for optimum cancer immunotherapy treatment (M36)

D7.4: Optimal material composition for heat exchange applications (M36)

D7.5: DCoMEX-BIO and DCoMEX-MAT commercialisation plan (report) (M36)

Working Package 8

| 1 | Working Package number: | WP8 |

| 2 | Working Package Title: | Performance Evaluation |

| 3 | Duration: | M10 – M36 |

| 4 | Lead beneficiary: | GRNET |

This work package will be carried out by GRNET in collaboration with the other core implementation partners (NTUA, ETHZ/CSELab). It is divided into the following tasks:

Task 8.1: Evaluation and improvement of efficiency and scalability, Participants: NTUA, ETHZ/CSELab, GRNET, ETHZ/CSCS, Duration: M8-M36

This task is responsible for evaluating the software and infrastructure produced throughout the project in terms of scalability, parallel efficiency, energy efficiency, and data locality. For the project to be successful, the produced solutions must be orders of magnitude more efficient and scalable than existing technologies. The integrated system, the individual solvers, and the applications built on them will be evaluated against existing solutions to obtain comparative performance metrics, including time to solution, Flop/s rates, scalability, and communication costs, across a range of platforms including accelerators. Another important part of the task will be to evaluate the energy efficiency of the solution provided. Energy efficiency can generally be inferred from Flop/s rates, but such an approach does not account for the costs of transferring data across networks and to and from disks (or other I/O storage systems), for wasted Flop/s (Flop/s available but not utilised), or for the pre-processing requirements of the various approaches. The energy measurement hardware available on Piz Daint enables fine-grained measurement of per-node and per-GPU energy consumption, and will be used to evaluate the energy efficiency of the solvers on small and large HPC systems.

Task 8.2: Evaluation of fault tolerance and error resilience, Participants: NTUA, ETHZ/CSELab, GRNET, Duration: M8-M36

Exascale systems are predicted to have high failure rates, both in terms of hard faults (failures of system components that terminate part of the simulation) and soft faults (silent or detected corruption of data that does not terminate that part of the simulation). The solvers being implemented in this project are designed to have some resilience to faults and errors; this task will evaluate those tolerances in practice by simulating hardware faults or data corruption, so that we can characterise the level of faults the software can cope with and compare it with documented fault levels on existing systems [SC14]. The initial work in this task will be to investigate and set up the fault simulation environment for the project. This will likely use either a virtual machine monitor setup (such as Palacios [PAL]), enabling such investigations at scale on large parallel systems and allowing the faults injected into the system to be tailored, or a gdb-based system [CHE17] that injects faults directly into the memory of the running program. A range of faults will be investigated, from corruption of memory and communication messages to failure of memory, cores, or nodes. This evaluation will also be extended to the end-user code DCoMEX-BIO, when available, to evaluate the impact of faults on results and the variation of outcomes caused by faults.
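The gdb- and hypervisor-based injectors mentioned above operate on a running program from outside. A much lighter, software-level stand-in for soft faults is to flip a single bit of a floating-point value inside the solver's own data, as sketched below. The function names are hypothetical, and the sketch only emulates silent data corruption in one array; it does not model the hard faults (lost cores or nodes) that are also in scope.

```python
import random
import struct


def flip_bit(x: float, bit: int) -> float:
    """Return x with one bit of its IEEE-754 binary64 encoding inverted (bit 0 = least significant)."""
    (u,) = struct.unpack("<Q", struct.pack("<d", x))
    (y,) = struct.unpack("<d", struct.pack("<Q", u ^ (1 << bit)))
    return y


def inject_soft_fault(vec, rng):
    """Silently corrupt one random entry of vec in place; return (index, bit) for the fault log."""
    i = rng.randrange(len(vec))
    bit = rng.randrange(64)
    vec[i] = flip_bit(vec[i], bit)
    return i, bit


print(flip_bit(1.0, 63))  # -1.0 (sign bit)
print(flip_bit(1.0, 52))  # 0.5 (lowest exponent bit)
v = [1.0, 2.0, 3.0]
print(inject_soft_fault(v, random.Random(7)), v)
```

Depending on which bit is hit, the same injector produces anything from a negligible perturbation (low mantissa bits) to an infinite or zeroed entry (high exponent bits), which is the range of outcomes a resilience evaluation needs to cover.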

Feedback on the results of the evaluations in Task 8.1 and Task 8.2 will be provided to the algorithmic development WPs, particularly Tasks 3.1-3.6 (solver algorithms) and 4.1-4.4 (UQ and Bayesian analysis algorithms).

The objectives of this WP are the following:

(i) Serial and parallel efficiency.

(ii) Fault tolerance and resilience.

(iii) Data locality.

The Deliverables are:

D8.1: Performance evaluation of DCoMEX-BIO prototype (report) (M36)

D8.2: Performance evaluation of DCoMEX-MAT prototype (report) (M36)

Working Package 9

| Working Package number: | WP9 |
|---|---|
| Working Package Title: | Communication, dissemination and exploitation |
| Duration: | M1 – M36 |
| Lead beneficiary: | ETHZ/CSELab |

The activities of this work package involve all partners. ETHZ/CSELab, as project coordinator, will lead the communication activities. NTUA will lead the dissemination activities, and TUM and UCY will lead the exploitation activities. The work in this work package is broken down into the following tasks:

Task 9.1: Communication activities, Participants: NTUA, ETHZ/CSELab, UCY, TUM, GRNET, ETHZ/CSCS, Duration: M1-M36

The goals of this task are: (i) to raise awareness within different target communities and the general public, (ii) to progressively demonstrate the project concept and system functionalities to key stakeholders at European level, (iii) to manage attendance at relevant conferences and the production of publications so as to achieve maximum effectiveness, (iv) to involve new end-user communities and IT providers, and (v) to pave the way for the exploitation of project results. To achieve these goals, a rich set of diverse activities will be implemented. These include the project website, blogcasts, videos, brochures and fliers, focused reports and announcements, as described in detail in Section 2.3.

Task 9.2: Dissemination activities, Participants: NTUA, ETHZ/CSELab, UCY, TUM, GRNET, ETHZ/CSCS, Duration: M1-M36

The dissemination efforts will initially concentrate on making the respective user communities and IT experts aware of the objectives and expected results of the project. This will be heavily supported by the communication activities in Task 9.1. After the first year of the project and until its end, the dissemination strategy will evolve more aggressively towards spreading the project results, forming active user communities, and reaching potential HPC vendors and IT customers. DCoMEX will follow a dual dissemination strategy. It will combine publication in highly acclaimed international journals and conferences with open access to the project results, following the green open-access model.

Task 9.3: Exploitation activities and planning, Participants: NTUA, ETHZ/CSELab, UCY, TUM, GRNET, ETHZ/CSCS, Duration: M1-M36

This task will promote a business culture within each partner. This will help to shape a sustainable future for each of the project results, whether they are exploited by a single partner or by the consortium as a whole. A joint exploitation plan will be developed on the basis of a comparative analysis against potential competitors, taking into account current market trends and perspectives. An initial joint exploitation plan, heavily based on open-source exploitation, is described in detail in Section 2.2.a.

The objectives of this WP are the following:

(i) To create core messaging around DCoMEX towards target groups.

(ii) To plan and orchestrate dissemination actions for DCoMEX-BIO results.

(iii) To build and pursue an effective exploitation strategy for sustainable use of DCoMEX-BIO results.

The Deliverables are:

D9.1: DCoMEX website: The fully functional DCoMEX website (M3)

D9.2: Communication, dissemination and exploitation plan (report): Detailed plan of the strategy for communication, dissemination and exploitation activities (M6)

D9.3: Communication, dissemination and exploitation report and plan (report): Detailed report on the relevant activities in the first 18 months of the project and, if necessary, a redesign of the strategy according to the results of the project and the status of the relevant markets (M18)

D9.4: Communication, dissemination and exploitation report (report): Detailed report on the relevant activities in the period M19-M42 of the project (M36)

D9.5: Data management plan (report): Details on the management policies for data that will be produced during the project. DCoMEX will participate in the Open Research Data Pilot of H2020 (M6)

Deliverables

Publications

2022

- S. Nikolopoulos, I. Kalogeris, V. Papadopoulos. Machine learning accelerated transient analysis of stochastic nonlinear structures. Engineering Structures, 2022. [Link]

- S. Bakalakos, M. Georgioudakis, M. Papadrakakis. Domain Decomposition Methods for 3D crack propagation using XFEM. Computer Methods in Applied Mechanics and Engineering, 2022. [Link]

- I. Ezhov, M. Rosier, L. Zimmer, F. Kofler, S. Shit, J.C. Paetzold, K. Scibilia, F. Steinbauer, L. Maechler, K. Franitza, T. Amiranashvili, M.J. Menten, M. Metz, S. Conjeti, B. Wiestler, B. Menze. A for-loop is all you need. For solving the inverse problem in the case of personalized tumor growth modeling. Proceedings of Machine Learning Research, 2022. [Link]

2023

- K. Tzirakis, C.P. Papanikas, V. Sakkalis, E. Tzamali, Y. Papaharilaou, A. Caiazzo, T. Stylianopoulos, V. Vavourakis. An adaptive semi-implicit finite element solver for brain cancer progression modeling. International Journal for Numerical Methods in Biomedical Engineering, 2023. [Link]

- L. Papadopoulos, S. Bakalakos, S. Nikolopoulos, I. Kalogeris, V. Papadopoulos. A computational framework for the indirect estimation of the interface thermal resistance of composite materials using XPINNs. International Journal of Heat and Mass Transfer, 2023. [Link]

- S. Pyrialakos, I. Kalogeris, V. Papadopoulos. Multiscale analysis of nonlinear systems using a hierarchy of deep neural networks. International Journal of Solids and Structures, 2023. [Link]

- L. Amoudruz, A. Economides, G. Arampatzis, P. Koumoutsakos. The stress-free state of human erythrocytes: Data-driven inference of a transferable RBC model. Biophysical Journal, 2023. [Link]

- I. Ezhov, K. Scibilia, K. Franitza, F. Steinbauer, S. Shit, L. Zimmer, J. Lipkova, F. Kofler, J.C. Paetzold, L. Canalini, D. Waldmannstetter, M.J. Menten, M. Metz, B. Wiestler, B. Menze. Learn-Morph-Infer: A new way of solving the inverse problem for brain tumor modeling. Medical Image Analysis, 2023. [Link]

- M.C. Metz, I. Ezhov, J.C. Peeken, J.A. Buchner, J. Lipkova, F. Kofler, D. Waldmannstetter, C. Delbridge, C. Diehl, D. Bernhardt, F. Schmidt-Graf, J. Gempt, S.E. Combs, C. Zimmer, B. Menze, B. Wiestler. Toward image-based personalization of glioblastoma therapy: A clinical and biological validation study of a novel, deep learning-driven tumor growth model. Neuro-Oncology Advances, 2023. [Link]

2024

- S. Nikolopoulos, I. Kalogeris, G. Stavroulakis, V. Papadopoulos. AI-enhanced iterative solvers for accelerating the solution of large-scale parameterized systems. International Journal for Numerical Methods in Engineering, 2024. [Link]

- L. Papadopoulos, K. Atzarakis, G. Sotiropoulos, I. Kalogeris, V. Papadopoulos. Fusing nonlinear solvers with transformers for accelerating the solution of parametric transient problems. Computer Methods in Applied Mechanics and Engineering, 2024. [Link]

- E. Ioannou, M. Hadjicharalambous, A. Malai, E. Papageorgiou, A. Peraticou, N. Katodritis, D. Vomvas, V. Vavourakis. Personalized in silico model for radiation-induced pulmonary fibrosis. Journal of the Royal Society Interface, 2024. [Link]

- P. Karnakov, S. Litvinov, P. Koumoutsakos. Solving inverse problems in physics by optimizing a discrete loss: Fast and accurate learning without neural networks. PNAS Nexus, 2024. [Link]

- J.P. von Bassewitz, S. Kaltenbach, P. Koumoutsakos. Improving the accuracy of Coarse-grained Partial Differential Equations with Grid-based Reinforcement Learning. ICML AI4Science Workshop, 2024. [Link]

2025

- S. Pyrialakos, I. Kalogeris, V. Papadopoulos. An efficient hierarchical Bayesian framework for multiscale material modeling. Composite Structures, 2025. [Link]

Accepted / Under Review

- H. Gao, S. Kaltenbach, P. Koumoutsakos. Generative Learning for the Effective Dynamics of Complex High-dimensional Systems. Nature Communications (Accepted). [Preprint]

- M. Balcerak, J. Weidner, P. Karnakov, I. Ezhov, S. Litvinov, P. Koumoutsakos, R.Z. Zhang, J.S. Lowengrub, I. Yakushev, B. Wiestler, B. Menze. Individualizing Glioma Radiotherapy planning by Optimization of a Data and Physics-Informed Discrete Loss. Under Review. [Preprint]

- E. Menier, S. Kaltenbach, M. Yagoubi, M. Schoenauer, P. Koumoutsakos. Interpretable learning of effective dynamics for multiscale systems. Under Review. [Preprint]

- S. Kaltenbach, P.S. Koutsourelakis, P. Koumoutsakos. Interpretable reduced-order modeling with time-scale separation. Under Review. [Preprint]

- J.P. von Bassewitz, S. Kaltenbach, P. Koumoutsakos. Closure Discovery for Coarse-grained Partial Differential Equations with Grid-based Reinforcement Learning. Under Review. [Preprint]

- J. Weidner, I. Ezhov, M. Metz, S. Litvinov, S. Kaltenbach, M. Balcerak, L. Feiner, L. Lux, F. Kofler, J. Lipkova, J. Latz, D. Rueckert, B. Menze. A learnable prior improves inverse tumor growth modeling. Under Review. [Preprint]

- M. Reche-Vilanova, S. Kaltenbach, P. Koumoutsakos, H.B. Bingham, M. Fluck, D. Morris, H.N. Psaraftis. Predictive surrogates for Aerodynamic Performance of Wind Propulsion System Configurations. Under Review.

- F. Catto, E. Dadgar-Kiani, D. Kirschenbaum, A. Economides, A.M. Reuss, C. Trevisan, D. Caredio, D. Mirzet, L. Frick, U. Weber-Stadlbauer, S. Litvinov, P. Koumoutsakos, J.H. Lee, A. Aguzzi. Quantitative 3D histochemistry reveals region-specific amyloid-β reduction by the antidiabetic drug netoglitazone. Under Review. [Preprint]

- H. Gao, S. Kaltenbach, P. Koumoutsakos. Generative Learning of the Solution of Parametric Partial Differential Equations using Guided Diffusion Models and Virtual Observations. [Preprint]

News

Press Contact

Contact for press inquiries:

Ioannis Kalogeris

National Technical University of Athens

ikalog@mail.ntua.gr

+30 6944711928

![Funded by the German Federal Ministry of Education and Research (BMBF)](http://mgroup.ntua.gr/wp-content/uploads/2022/03/BMBF_gefoerdert_2017_en-300x206.jpg)

Social Media

Facebook: https://www.facebook.com/dcomex.eu/

Twitter: https://twitter.com/dcomex_eu